Say that all of humanity – past, present and foreseeable future – has a dollar to spend on carbon-fueled economic growth. Those of us that have reaped the industrial world’s benefits doled out four bits or so from 1750 to 2008, and some of those investments paid off handsomely. Standards of health, education and material living rose. And the global digital network emerged.

Fair to say, these expenditures largely preceded any broad realization that the carbon go-go days might be a passing phase. But that is no longer the case. The total bank – set, not by resource limits, but by the planet’s capacity for waste absorption – has been counted. One need not embrace central planning to wonder how a pragmatic and just CFO might eye the remaining balance.

This is an excerpt from my June 2009 article, “The Story of the Trillion Tons of Carbon” (i.e., metric tons or, alternatively, tonnes). At the time, two papers published in Nature offered the first detailed examination of the relationship between cumulative carbon emissions and global temperature increases. The gist is that if human-engendered temperature increases are to be kept under the internationally agreed-upon target of 2°C (3.6°F), then cumulative carbon emissions must be no more than a trillion metric tons. Actually, less than a trillion, once other greenhouse gases are accounted for. And half the budget has already been spent.

In the summer of 2012, Bill McKibben called this realization “humanity’s terrifying new math.”

This year, carbon calculations are in the spotlight again, having made it into the Intergovernmental Panel on Climate Change (IPCC) final draft report (on pages 63-64 of the 2,216-page Complete Underlying Scientific/Technical Assessment) and its Summary for Policymakers.

Last week’s New Scientist (“IPCC digested: Just leave the fossil fuels underground,” by Michael Le Page):

Merely reducing emissions is not enough. It will slow climate change, but in the end how much the planet warms depends on the overall amount of CO2 we pump out. To have any chance of limiting the global temperature rise to 2 °C, we have to limit future emissions to about 500 gigatonnes of CO2. Burning known fossil fuel reserves would release nearly 3000 gigatonnes, and energy companies are currently spending $600 billion trying to find more.

The implications of the numbers are staggering. The value of these companies depends on their reserves. If at some point in the future the world gets serious about tackling climate change, these reserves will become worthless. About $4 trillion worth of shares would be wiped out, according to the non-profit Carbon Tracker Initiative. For most of us, that’s our pension funds at risk.

The NYT has the first piece I’ve seen on the IPCC’s backroom political negotiations (“How to Slice a Global Carbon Pie?,” by Justin Gillis):

The scientists had wanted to specify a carbon budget that gave the best chance of keeping temperatures at the 3.6 degree target or below. But many countries felt the question was related to risk — and that the issue of how much risk to take was political, not scientific. The American delegation suggested that the scientists lay out a range of probabilities for staying below the 3.6-degree target, not a single budget, and that is what they finally did.

The original budget is in there. But the adopted language gives countries the possibility of a much larger carbon pie, if they are willing to tolerate a greater risk of exceeding the temperature target.

It’s worth asking: since there are already targets of 2°C and of 350 parts per million of CO2 in the atmosphere (the latter serving as the centerpiece of a global campaign), what does yet another target add?

One difference is that the trillion-tonne target raises difficult and inescapable questions of climate justice. After all, who has benefited from the first half-trillion tonnes? Which individuals, which nations, and which generations? And who will benefit from the next half? Such questions are far less visible in the other two targets.

Back in 2009, my trillion-tonne story highlighted Henry Shue’s writings on “distributive justice.” And as all eyes turned to the upcoming Copenhagen Climate Conference, I edited and published a series of articles on climate justice by Peter Singer, Dale Jamieson, Steve Vanderheiden, Kathleen Dean Moore, Paul Baer, and Stephen Gardiner.

Now that the trillion-tonne budget has been recognized by the IPCC, these voices are more critical than ever.

At the same time, it’s worth thinking carefully about this word “target.” Nearly fifty years ago, Martin Luther King assured us that the arc of the moral universe, though long, bends toward justice. I may be mistaken, but I don’t recall Dr. King ever describing a target-based management approach for getting there.

See also: critiques of target-based management, in other contexts, by Buzz Holling and Ray Ison.

Numerous climate commentators — from economist

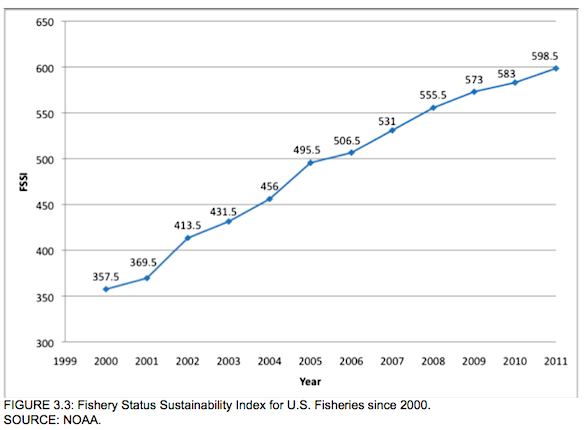

The U.S. National Oceanic and Atmospheric Administration